Vision Algorithms for Embedded Vision

Most computer vision algorithms were developed on general-purpose computer systems with software written in a high-level language. Some pixel-processing operations (e.g., spatial filtering) have changed very little in the decades since they were first implemented on mainframes. With today’s broader range of embedded vision implementations, existing high-level algorithms may not fit within system constraints, so new approaches are often needed to achieve the desired results.
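Spatial filtering replaces each pixel with a combination of its neighbors. The following is a minimal pure-Python sketch of a 3x3 mean (box) filter. It assumes a grayscale image stored as a list of rows and uses replicate padding at the borders; both choices, and the function name, are illustrative assumptions rather than any particular library's behavior.

```python
def box_filter_3x3(image):
    """Return a 3x3 mean-filtered copy of a 2D grayscale image (list of rows).

    Border pixels are handled by clamping coordinates to the image edge
    (replicate padding), one of several common border policies.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0
            # Sum the 3x3 neighborhood, clamping indices at the borders.
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), height - 1)
                    xx = min(max(x + dx, 0), width - 1)
                    total += image[yy][xx]
            out[y][x] = total / 9.0
    return out


if __name__ == "__main__":
    img = [[0, 0, 0],
           [0, 9, 0],
           [0, 0, 0]]
    for row in box_filter_3x3(img):
        print(row)
```

Each output pixel costs nine reads and nine additions, which is exactly the kind of inner loop that embedded implementations try to restructure.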

Some of this innovation may involve replacing a general-purpose algorithm with a hardware-optimized equivalent. With such a broad range of processors for embedded vision, algorithm analysis will likely focus on ways to maximize pixel-level processing within system constraints.
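As a small illustration of a hardware-optimized equivalent, a 3x3 binomial (Gaussian-like) smoothing kernel can be split into two 1D [1, 2, 1] passes that need only integer additions and shifts, which suits FPGA logic or DSPs without floating-point units. The sketch below (function names hypothetical, "valid"-region output only) compares the separable fixed-point version against a direct 2D convolution; the two produce identical results because the single divide-by-16 is deferred to the end.

```python
def gaussian3x3_direct(image):
    """Reference: direct 2D convolution with the 3x3 binomial kernel / 16.

    Only 'valid' output pixels are computed, so the result is (H-2) x (W-2).
    """
    k = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
    h, w = len(image), len(image[0])
    out = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            acc = 0
            for dy in range(3):
                for dx in range(3):
                    acc += k[dy][dx] * image[y + dy - 1][x + dx - 1]
            row.append(acc // 16)
        out.append(row)
    return out


def gaussian3x3_separable_fixed(image):
    """Hardware-friendly equivalent: two 1D [1, 2, 1] passes using integer
    adds and shifts only (no multipliers, no division)."""
    h, w = len(image), len(image[0])
    # Horizontal pass: a + 2b + c, with the multiply-by-2 done as a left shift.
    horiz = [[r[x - 1] + (r[x] << 1) + r[x + 1] for x in range(1, w - 1)]
             for r in image]
    # Vertical pass on the horizontal result, then one divide-by-16 as >> 4.
    return [[(horiz[y - 1][x] + (horiz[y][x] << 1) + horiz[y + 1][x]) >> 4
             for x in range(w - 2)]
            for y in range(1, h - 1)]
```

Per output pixel, the separable form uses four additions and three shifts instead of nine multiply-accumulates.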

This section refers to both general-purpose operations (e.g., edge detection) and hardware-optimized versions (e.g., parallel adaptive filtering in an FPGA). Many sources exist for general-purpose algorithms. The Embedded Vision Alliance is one of the best industry resources for learning about algorithms that map to specific hardware, since Alliance Members share this information directly with the vision community.
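Edge detection, the general-purpose example above, is often implemented with the Sobel operator. The sketch below assumes a grayscale list-of-rows image and uses |Gx| + |Gy| as a cheap approximation of the gradient magnitude (a common simplification in fixed-point hardware); the function name and threshold are illustrative.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(image, threshold):
    """Return a binary edge map (1 = edge) for the valid interior of a
    2D grayscale image, using |Gx| + |Gy| as the gradient magnitude."""
    h, w = len(image), len(image[0])
    edges = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            gx = gy = 0
            # Correlate the 3x3 neighborhood with both Sobel kernels.
            for dy in range(3):
                for dx in range(3):
                    p = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            row.append(1 if abs(gx) + abs(gy) >= threshold else 0)
        edges.append(row)
    return edges
```

For a vertical step such as `[[0, 0, 0, 100, 100, 100]] * 3` with a threshold of 200, only the two interior columns that straddle the step are marked as edges.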

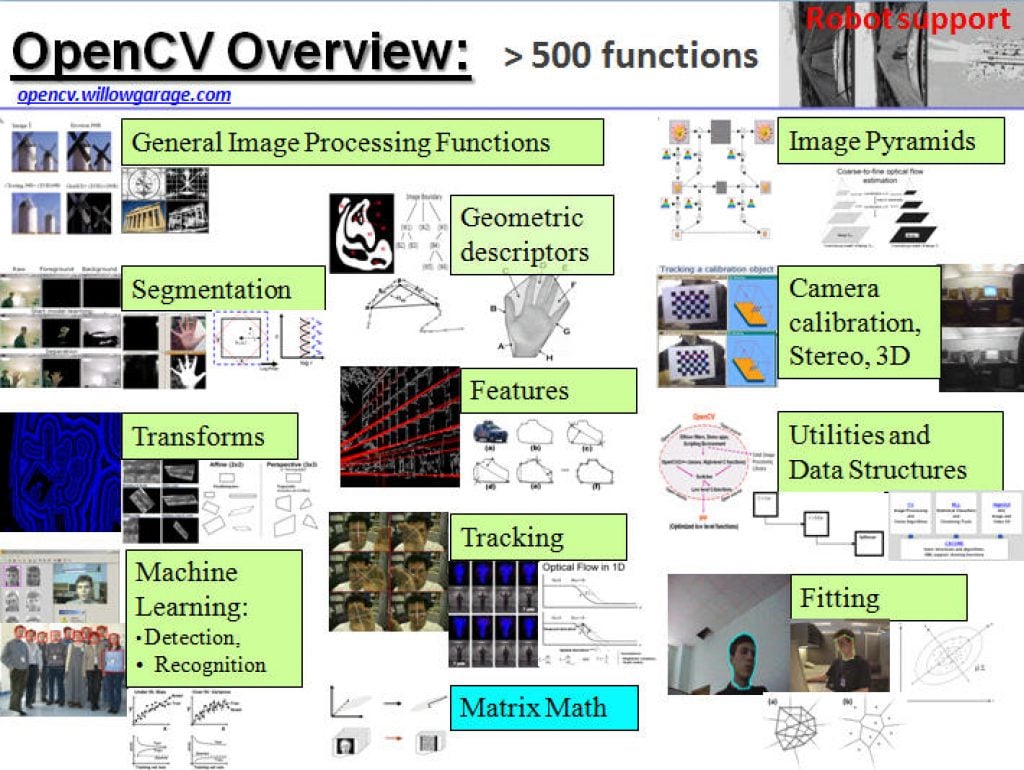

General-purpose computer vision algorithms

One of the most popular sources of computer vision algorithms is the OpenCV Library. OpenCV is open-source; originally written in C, it now uses C++ as its primary interface and also provides Python and Java bindings. For more information, see the Alliance’s interview with OpenCV Foundation President and CEO Gary Bradski, along with other OpenCV-related materials on the Alliance website.

Hardware-optimized computer vision algorithms

Several programmable device vendors have created optimized versions of off-the-shelf computer vision libraries. NVIDIA, for example, works closely with the OpenCV community and has created algorithms that are accelerated by GPGPUs. MathWorks provides MATLAB functions/objects and Simulink blocks for many computer vision algorithms within its Computer Vision System Toolbox, while also allowing vendors to create their own libraries of functions that are optimized for a specific programmable architecture. National Instruments offers its LabVIEW Vision module library. Xilinx is another example: it provides customers with an optimized computer vision library in the form of plug-and-play IP cores for creating hardware-accelerated vision algorithms in an FPGA.
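FPGA vision pipelines commonly process pixels as a raster-order stream and buffer only a few image rows rather than whole frames. The Python model below is an illustrative sketch of that line-buffer idea, assuming a 3x3 window and zero-initialized buffers; it is not based on any specific vendor's IP core, and the function name is hypothetical.

```python
from collections import deque


def stream_3x3_windows(pixels, width):
    """Illustrative model of an FPGA-style line-buffer pipeline (hypothetical name).

    `pixels` yields pixel values in raster order, one per "clock". Two line
    buffers of `width` entries each remember the previous two rows, so only
    2 * width pixels are stored instead of a whole frame. Yields
    (cy, cx, window) for every pixel whose full 3x3 neighborhood has arrived,
    where (cy, cx) is the window center.
    """
    line1 = deque([0] * width, maxlen=width)  # delays by one row: (y-1, x)
    line2 = deque([0] * width, maxlen=width)  # delays by two rows: (y-2, x)
    # 3x3 shift register; each row holds columns x-2, x-1, x.
    window = [deque([0, 0, 0], maxlen=3) for _ in range(3)]
    for i, p in enumerate(pixels):
        y, x = divmod(i, width)
        above1 = line1[0]  # pixel that arrived `width` clocks ago
        above2 = line2[0]  # pixel that arrived 2 * width clocks ago
        line2.append(above1)  # appending drops each buffer's oldest entry
        line1.append(p)
        window[0].append(above2)
        window[1].append(above1)
        window[2].append(p)
        if y >= 2 and x >= 2:
            yield y - 1, x - 1, [list(row) for row in window]
```

Because every window is available one pixel per clock after a two-row latency, this structure lets a downstream 3x3 operator (smoothing, Sobel, and so on) run at the pixel rate without random access to frame memory.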

Other vision libraries

- Halcon

- Matrox Imaging Library (MIL)

- Cognex VisionPro

- VXL

- CImg

- Filters

Vector Databases: Unlock the Potential of Your Data

This blog post was originally published at Tenyks’ website. It is reprinted here with the permission of Tenyks. In the field of artificial intelligence, vector databases are an emerging database technology that is transforming how we represent and analyze data by using vectors (multi-dimensional numerical arrays) to capture the semantic relationships between data…
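The core query a vector database answers is which stored vectors lie closest to a query vector. A minimal exact (brute-force) sketch using cosine similarity follows; production vector databases typically rely on approximate nearest-neighbor indexes instead, and the toy embeddings and function names here are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, vectors, k=1):
    """Return the ids of the k stored vectors most similar to `query`.

    `vectors` maps an id to its embedding. This is exact brute-force search,
    costing O(N * D) per query for N vectors of dimension D.
    """
    scored = sorted(vectors.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [vid for vid, _ in scored[:k]]


if __name__ == "__main__":
    store = {"cat": [0.9, 0.1, 0.0],
             "car": [0.0, 0.2, 0.9],
             "kitten": [0.8, 0.2, 0.1]}
    print(top_k([1.0, 0.0, 0.0], store, k=2))
```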

The Guide to Fine-tuning Stable Diffusion with Your Own Images

This article was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. Have you ever wished you were able to try out a new hairstyle before finally committing to it? How about fulfilling your childhood dream of being a superhero? Maybe having your own digital Funko Pop to use as…

“Practical Approaches to DNN Quantization,” a Presentation from Magic Leap

Dwith Chenna, Senior Embedded DSP Engineer for Computer Vision at Magic Leap, presents the “Practical Approaches to DNN Quantization” tutorial at the May 2023 Embedded Vision Summit. Convolutional neural networks, widely used in computer vision tasks, require substantial computation and memory resources, making it challenging to run these models on…
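Quantization maps floating-point weights to low-precision integers to reduce memory and compute. One common scheme, symmetric per-tensor linear quantization to int8, is sketched below. It illustrates the general technique and is not necessarily the approach covered in the presentation; the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor linear quantization of floats to int8 codes.

    Returns (codes, scale) such that weights ~= codes * scale. The symmetric
    range [-127, 127] (dropping -128) lets positive and negative values share
    one scale with no zero-point offset.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid divide-by-zero
    # Python's round() rounds halves to even; the clamp guards the int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate floating-point values."""
    return [c * scale for c in codes]
```

The per-element reconstruction error is at most half a quantization step (`scale / 2`), which is why outlier weights that inflate `max_abs` also inflate the error for every other weight in the tensor.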

FRAMOS Launches Event-based Vision Sensing (EVS) Development Kit

[Munich, Germany / Ottawa, Canada, 4 October] — FRAMOS launched the FSM-IMX636 Development Kit, an innovative platform allowing developers to explore the capabilities of Event-based Vision Sensing (EVS) technology and test potential benefits of using the technology on NVIDIA® Jetson with the FRAMOS sensor module ecosystem. Built around SONY and PROPHESEE’s cutting-edge EVS technology,…

“Optimizing Image Quality and Stereo Depth at the Edge,” a Presentation from John Deere

Travis Davis, Delivery Manager in the Automation and Autonomy Core, and Tarik Loukili, Technical Lead for Automation and Autonomy Applications, both of John Deere, present the “Optimizing Image Quality and Stereo Depth at the Edge” tutorial at the May 2023 Embedded Vision Summit. John Deere uses machine learning and computer vision (including stereo…

CircuitSutra Technologies Demonstration of Virtual Prototyping for Pre-silicon Software Development

Umesh Sisodia, President and CEO of CircuitSutra Technologies, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Sisodia demonstrates a virtual prototype of an ARM Cortex-based SoC, developed using SystemC and the CircuitSutra Modelling Library (CSTML). It is able to boot Linux…

“Using a Collaborative Network of Distributed Cameras for Object Tracking,” a Presentation from Invision AI

Samuel Örn, Team Lead and Senior Machine Learning and Computer Vision Engineer at Invision AI, presents the “Using a Collaborative Network of Distributed Cameras for Object Tracking” tutorial at the May 2023 Embedded Vision Summit. Using multiple fixed cameras to track objects requires a careful solution design. To enable scaling…

ProHawk Technology Group Overview of AI-enabled Computer Vision Restoration

Brent Willis, Chief Operating Officer of the ProHawk Technology Group, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Willis discusses the company’s AI-enabled computer vision restoration technology. ProHawk’s patented algorithms and technologies enable real-time, pixel-by-pixel video restoration, overcoming virtually all environmental…

DeGirum Demonstration of Streaming Edge AI Development and Deployment

Konstantin Kudryavtsev, Vice President of Software Development at DeGirum, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Kudryavtsev demonstrates streaming edge AI development and deployment using the company’s JavaScript and Python SDKs and its cloud platform. On the software front, DeGirum…

Cadence Demonstrations of Generative AI and People Tracking at the Edge

Amol Borkar, Director of Product and Marketing for Vision and AI DSPs at Cadence Tensilica, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Borkar demonstrates two applications running on customers’ SoCs, showcasing Cadence’s pervasiveness in AI. The first demonstration is of…

From ‘Smart’ to ‘Useful’ Sensors

Can anyone make ‘smart home’ devices useful to consumers? A Calif. startup, Useful Sensors, believes it has the magic potion. What’s at stake? Talk of edge AI, particularly machine learning, has captivated the IoT market. Yet actual consumer products with local machine learning capabilities are rare. Who’s ready to pull that off? Will it be…

“A Survey of Model Compression Methods,” a Presentation from Instrumental

Rustem Feyzkhanov, Staff Machine Learning Engineer at Instrumental, presents the “A Survey of Model Compression Methods” tutorial at the May 2023 Embedded Vision Summit. One of the main challenges when deploying computer vision models to the edge is optimizing the model for speed, memory and energy consumption. In this talk, Feyzkhanov…

Unleashing LiDAR’s Potential: A Conversation with Innovusion

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The market for LiDAR in automotive applications is expected to reach US$3.9 billion in 2028 from US$169 million in 2022, representing a 69% Compound Annual Growth Rate (CAGR). According to Yole Intelligence’s…
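The quoted growth rate can be checked with the standard CAGR formula, (end / start)^(1 / years) - 1, applied to the six years from 2022 to 2028:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.69 means 69%)."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


rate = cagr(169e6, 3.9e9, 2028 - 2022)
print(f"{rate:.1%}")  # prints 68.7%
```

The result, about 68.7% per year, rounds to the 69% CAGR cited in the report.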

The History of AI: How Generative AI Grew from Early Research

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. From how AI started to how it impacts you today, here’s your comprehensive AI primer. When you hear Artificial Intelligence (AI): Do you think of the Terminator or Data on “Star Trek: The Next Generation”? While neither…

“Reinventing Smart Cities with Computer Vision,” a Presentation from Hayden AI

Vaibhav Ghadiok, Co-founder and CTO of Hayden AI, presents the “Reinventing Smart Cities with Computer Vision” tutorial at the May 2023 Embedded Vision Summit. Hayden AI has developed the first AI-powered data platform for smart and safe city applications such as traffic enforcement, parking and asset management. In this talk,…

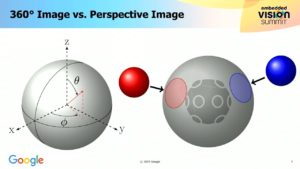

“Learning for 360° Vision,” a Presentation from Google

Yu-Chuan Su, Research Scientist at Google, presents the “Learning for 360° Vision” tutorial at the May 2023 Embedded Vision Summit. As a core building block of virtual reality (VR) and augmented reality (AR) technology, and with the rapid growth of VR and AR, 360° cameras are becoming more available and…