Processors for Embedded Vision


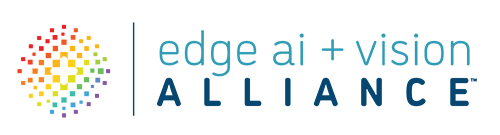

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.
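To make "compute-intensive" concrete, the sketch below estimates the sustained arithmetic load of each stage of a hypothetical pipeline for a 1920x1080 stream at 60 frames/s. The `Stage` type, the stage names, and the per-pixel and per-frame operation counts are illustrative assumptions, not measurements of any particular algorithm or processor.

```python
"""Back-of-the-envelope compute budget for a hypothetical vision pipeline.

Stage names and operation counts are illustrative assumptions only.
"""
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Stage:
    name: str
    ops_per_pixel: float        # assumed multiply-accumulates per input pixel
    per_frame_ops: float = 0.0  # assumed fixed cost per frame (e.g., decision logic)


def ops_per_second(stages: List[Stage], width: int, height: int, fps: float) -> Dict[str, float]:
    """Return the sustained operations/second each stage needs at the given resolution and frame rate."""
    pixels = width * height
    return {s.name: (s.ops_per_pixel * pixels + s.per_frame_ops) * fps for s in stages}


pipeline = [
    Stage("color conversion", ops_per_pixel=3),
    Stage("3x3 filter", ops_per_pixel=9),
    Stage("feature scoring", ops_per_pixel=25),
    Stage("decision logic", ops_per_pixel=0, per_frame_ops=10_000),
]

budget = ops_per_second(pipeline, 1920, 1080, 60)
for name, rate in budget.items():
    print(f"{name:>16}: {rate / 1e9:8.3f} G ops/s")
```

Under these assumptions the per-pixel stages need on the order of billions of operations per second, while the per-frame decision logic needs well under a million, which is why processors tend to be optimized for the pixel-level portion of the workload.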

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints (such as power, size, or cost) of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.
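The division of labor described above can be sketched as a control loop on the CPU that delegates pixel-level work through an interface. `PixelEngine`, `ReferenceEngine`, and `MotionController` are hypothetical names chosen for illustration: on real hardware the engine would wrap a vendor-specific GPU, DSP, FPGA, or ASSP runtime, while the heuristic (here, a simple hysteresis rule) stays on the CPU.

```python
"""Sketch of a CPU control loop that offloads pixel-level work.

PixelEngine, ReferenceEngine and MotionController are hypothetical names.
"""
from typing import List, Optional, Protocol

Frame = List[List[int]]


class PixelEngine(Protocol):
    def changed_pixels(self, prev: Frame, cur: Frame, delta: int) -> int:
        """Count pixels whose absolute difference exceeds `delta`."""
        ...


class ReferenceEngine:
    """Pure-Python stand-in for a vision accelerator (GPU, DSP, FPGA, or ASSP)."""

    def changed_pixels(self, prev: Frame, cur: Frame, delta: int) -> int:
        return sum(
            1
            for row_p, row_c in zip(prev, cur)
            for p, c in zip(row_p, row_c)
            if abs(c - p) > delta
        )


class MotionController:
    """CPU-side heuristic: require several consecutive 'busy' frames before alarming."""

    def __init__(self, engine: PixelEngine, min_pixels: int,
                 frames_to_trigger: int, delta: int = 20) -> None:
        self.engine = engine
        self.min_pixels = min_pixels
        self.frames_to_trigger = frames_to_trigger
        self.delta = delta  # assumed noise margin per pixel
        self.streak = 0
        self.prev: Optional[Frame] = None

    def on_frame(self, frame: Frame) -> bool:
        alarm = False
        if self.prev is not None:
            # Pixel-level work is delegated; only a single count comes back to the CPU.
            moving = self.engine.changed_pixels(self.prev, frame, self.delta)
            self.streak = self.streak + 1 if moving >= self.min_pixels else 0
            alarm = self.streak >= self.frames_to_trigger
        self.prev = frame
        return alarm


still = [[0] * 4 for _ in range(4)]
bright = [[100] * 4 for _ in range(4)]
ctrl = MotionController(ReferenceEngine(), min_pixels=8, frames_to_trigger=2)
results = [ctrl.on_frame(f) for f in [still, bright, still, still]]
```

Keeping the interface narrow (frames in, one integer out) is the design choice that makes the pixel engine swappable without touching the control logic.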

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, meeting the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. There are many examples of x86-based embedded systems with discrete GPGPUs.
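What makes pixel processing a good fit for a GPU is that each output pixel can often be computed independently of the others. The sketch below mimics that programming model in plain Python: a per-pixel kernel is "launched" once per grid position, and because no invocation depends on another, any execution order (including a fully parallel one) yields the same image. `brighten_kernel` and `launch` are hypothetical names; real GPU code would use an API such as CUDA or OpenCL.

```python
"""Data-parallel, per-pixel 'kernel' launched over a grid (GPU-style model).

brighten_kernel and launch are hypothetical names for illustration.
"""
import random
from typing import Callable, List, Optional

Image = List[List[int]]


def brighten_kernel(src: Image, dst: Image, x: int, y: int) -> None:
    """One 'thread': reads only its own input pixel and writes only its own output pixel."""
    dst[y][x] = min(255, src[y][x] * 2)


def launch(kernel: Callable[[Image, Image, int, int], None], src: Image,
           seed: Optional[int] = None) -> Image:
    """Invoke `kernel` once per pixel; a seed shuffles the order to show it does not matter."""
    h, w = len(src), len(src[0])
    dst = [[0] * w for _ in range(h)]
    coords = [(x, y) for y in range(h) for x in range(w)]
    if seed is not None:
        random.Random(seed).shuffle(coords)  # stand-in for unpredictable thread scheduling
    for x, y in coords:
        kernel(src, dst, x, y)
    return dst


frame = [[10, 100, 200], [0, 50, 128]]
in_order = launch(brighten_kernel, frame)
shuffled = launch(brighten_kernel, frame, seed=42)
assert in_order == shuffled  # order independence is what lets a GPU run pixels in parallel
```

Stages with dependencies between pixels (for example, connected-component labeling) do not map this cleanly, which is one reason a GPU typically assists a CPU rather than replacing it.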

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
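Processing pixels "as they stream from a sensor" usually means never buffering a whole frame: a 3x3 filter, for example, only needs the three most recent rows. The generator below is a minimal sketch of that line-buffer pattern, assuming rows arrive one at a time; `stream_box3` and `rows_from_sensor` are hypothetical names, and production DSP code would typically rely on vendor intrinsics and DMA rather than Python lists.

```python
"""Line-buffered 3x3 box filter that consumes an image one row at a time.

Only three rows are held in memory, mimicking how a DSP processes pixel
data as it streams from a sensor. Names are hypothetical.
"""
from collections import deque
from typing import Deque, Iterable, Iterator, List

Row = List[int]


def stream_box3(rows: Iterable[Row]) -> Iterator[Row]:
    """Yield one filtered row (interior columns only) per input row once 3 rows are buffered."""
    window: Deque[Row] = deque(maxlen=3)  # the line buffer: oldest row drops out automatically
    for row in rows:
        window.append(row)
        if len(window) < 3:
            continue  # still filling the line buffer
        top, mid, bot = window
        yield [
            (sum(top[x - 1:x + 2]) + sum(mid[x - 1:x + 2]) + sum(bot[x - 1:x + 2])) // 9
            for x in range(1, len(mid) - 1)
        ]


def rows_from_sensor(height: int, width: int) -> Iterator[Row]:
    """Hypothetical sensor: emits a horizontal gradient, one row at a time."""
    for _ in range(height):
        yield [x * 10 for x in range(width)]


filtered = list(stream_box3(rows_from_sensor(height=5, width=5)))
```

For an H x W input this yields (H - 2) rows of (W - 2) pixels, while memory use stays at three rows regardless of frame height.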

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable hardware accelerator. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates per second (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can accelerate multiple portions of a computer vision pipeline simultaneously. Because the parallel nature of FPGAs offers such an advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
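The benefit of accelerating several pipeline stages at once can be illustrated with simple cycle counting. The sketch assumes an idealized model in which every stage takes exactly one clock cycle per pixel and never stalls: a single sequential core must run every stage for every pixel, while an FPGA-style pipeline with one hardware block per stage works on different pixels at the same time. The cycle model and function names are assumptions; real designs also contend with memory bandwidth, clock-rate differences, and stalls.

```python
"""Idealized cycle counts: sequential stages vs. a spatial (FPGA-style) pipeline.

Assumes every stage costs exactly one cycle per pixel and never stalls.
"""
from typing import List, Optional


def sequential_cycles(num_pixels: int, num_stages: int) -> int:
    """One core time-slices the stages: every stage runs for every pixel."""
    return num_pixels * num_stages


def pipelined_cycles(num_pixels: int, num_stages: int) -> int:
    """Simulate a pipeline in which each stage holds at most one pixel per cycle."""
    stages: List[Optional[int]] = [None] * num_stages  # pixel index held by each stage
    next_pixel = done = cycles = 0
    while done < num_pixels:
        cycles += 1
        # Every pixel advances one stage; a new pixel (if any) enters stage 0.
        stages = [None] + stages[:-1]
        if next_pixel < num_pixels:
            stages[0] = next_pixel
            next_pixel += 1
        # All occupied stages work in parallel this cycle; the last stage finishes its pixel.
        if stages[-1] is not None:
            done += 1
    return cycles


pixels, num_stages = 640 * 480, 4  # hypothetical VGA frame through a 4-stage pipeline
seq = sequential_cycles(pixels, num_stages)
pipe = pipelined_cycles(pixels, num_stages)
print(f"sequential: {seq} cycles, pipelined: {pipe} cycles, speedup ~{seq / pipe:.2f}x")
```

For P pixels and S stages the simulation gives P + S - 1 cycles versus P * S, so once the pipeline has filled, throughput approaches S times that of the sequential model.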

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make programming them more difficult than with other kinds of processors; some ASSPs are not user-programmable.

How NVIDIA and e-con Systems are Helping Solve Major Challenges In the Retail Industry

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. e-con Systems has proven expertise in integrating its cameras into the NVIDIA platform, including Jetson Xavier NX / Nano / TX2 NX, Jetson AGX Xavier, Jetson AGX Orin, and NVIDIA Jetson Orin NX / Nano.

FRAMOS Launches Event-based Vision Sensing (EVS) Development Kit

[Munich, Germany / Ottawa, Canada, 4 October] – FRAMOS launched the FSM-IMX636 Development Kit, an innovative platform allowing developers to explore the capabilities of Event-based Vision Sensing (EVS) technology and test potential benefits of using the technology on NVIDIA® Jetson with the FRAMOS sensor module ecosystem. Built around SONY and PROPHESEE’s cutting-edge EVS technology,

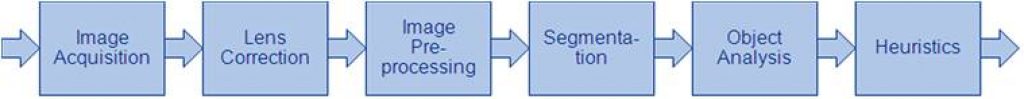

“Optimizing Image Quality and Stereo Depth at the Edge,” a Presentation from John Deere

Travis Davis, Delivery Manager in the Automation and Autonomy Core, and Tarik Loukili, Technical Lead for Automation and Autonomy Applications, both of John Deere, present the “Optimizing Image Quality and Stereo Depth at the Edge” tutorial at the May 2023 Embedded Vision Summit. John Deere uses machine learning and computer vision (including stereo…

CircuitSutra Technologies Demonstration of Virtual Prototyping for Pre-silicon Software Development

Umesh Sisodia, President and CEO of CircuitSutra Technologies, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Sisodia demonstrates a virtual prototype of an ARM Cortex-based SoC, developed using SystemC and the CircuitSutra Modelling Library (CSTML). It is able to boot Linux

DeGirum Demonstration of Streaming Edge AI Development and Deployment

Konstantin Kudryavtsev, Vice President of Software Development at DeGirum, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Kudryavtsev demonstrates streaming edge AI development and deployment using the company’s JavaScript and Python SDKs and its cloud platform. On the software front, DeGirum

Cadence Demonstrations of Generative AI and People Tracking at the Edge

Amol Borkar, Director of Product and Marketing for Vision and AI DSPs at Cadence Tensilica, demonstrates the company’s latest edge AI and vision technologies and products at the September 2023 Edge AI and Vision Alliance Forum. Specifically, Borkar demonstrates two applications running on customers’ SoCs, showcasing Cadence’s pervasiveness in AI. The first demonstration is of

From ‘Smart’ to ‘Useful’ Sensors

Can anyone make ‘smart home’ devices useful to consumers? A Calif. startup, Useful Sensors, believes it has the magic potion. What’s at stake? Talk of edge AI, particularly machine learning, has captivated the IoT market. Yet actual consumer products with local machine learning capabilities are rare. Who’s ready to pull that off? Will it be

The History of AI: How Generative AI Grew from Early Research

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. From how AI started to how it impacts you today, here’s your comprehensive AI primer. When you hear Artificial Intelligence (AI): Do you think of the Terminator or Data on “Star Trek: The Next Generation”? While neither

“Reinventing Smart Cities with Computer Vision,” a Presentation from Hayden AI

Vaibhav Ghadiok, Co-founder and CTO of Hayden AI, presents the “Reinventing Smart Cities with Computer Vision” tutorial at the May 2023 Embedded Vision Summit. Hayden AI has developed the first AI-powered data platform for smart and safe city applications such as traffic enforcement, parking and asset management. In this talk,…

SiP Market Soars on the Wings of Chiplets and Heterogeneous Integration

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The growth of the SiP market is propelled by the trends in 5G, AI, HPC, autonomous driving, and IoT. Outline: The SiP market is forecast to reach US$33.8 billion by 2028, showcasing

AI and the Road to Full Autonomy in Autonomous Vehicles

The road to fully autonomous vehicles is, by necessity, a long and winding one; systems that implement new technologies that increase the driving level of vehicles (driving levels being discussed further below) must be rigorously tested for safety and longevity before they can make it to vehicles that are bound for public streets. The network

Qualcomm Launches Its Next Generation XR and AR Platforms, Enabling Immersive Experiences and Slimmer Devices

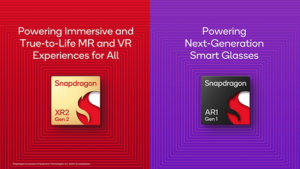

Meta to commercialize both in 2023. Highlights: Snapdragon® XR2 Gen 2 Platform delivers significant performance innovations with 2.5x higher GPU performance and 8x better AI. Snapdragon AR1 Gen 1 Platform is the first dedicated processor for sleek smart glasses. Both platforms deliver on-device AI, enabling more complex, immersive, and personalized experiences. Qualcomm remains the spatial

Lattice Introduces Industry’s First Small Embedded Vision FPGA with Integrated USB

Extends small, low-power FPGA portfolio with first-in-class FPGA featuring hardened USB for AI & embedded vision applications. HILLSBORO, Ore. – September 26, 2023 – Lattice Semiconductor (NASDAQ: LSCC), the low power programmable leader, today announced the Lattice CrossLinkU™-NX FPGA family, the industry’s first FPGAs with integrated USB device functionality in their class. CrossLinkU-NX FPGAs help

“90% of Tech Start-Ups Fail. What the Other 10% Know,” a Presentation from Connected Vision Advisors

Simon Morris, Executive Advisor at Connected Vision Advisors, presents the “90% of Tech Start-Ups Fail. What Do the Other 10% Know?” tutorial at the May 2023 Embedded Vision Summit. Morris is fortunate to have led three tech start-ups with three successful exits. He received a lot of advice along the…

Flex Logix Expands Upon Industry-leading Embedded FPGA Customer Base

Company’s award-winning EFLX® eFPGA technology currently used by 20 customers for 40 unique chips. MOUNTAIN VIEW, Calif., Sept. 25, 2023 /PRNewswire/ – Flex Logix® Technologies, Inc., the leading supplier of embedded FPGA (eFPGA) IP, announced today that it now has 20 worldwide customers that have licensed the company’s advanced EFLX eFPGA technology architecture for 40

“Introduction to Optimizing ML Models for the Edge,” a Presentation from Cisco Systems

Kumaran Ponnambalam, Principal Engineer of AI, Emerging Tech and Incubation at Cisco Systems, presents the “Introduction to Optimizing ML Models for the Edge” tutorial at the May 2023 Embedded Vision Summit. Edge computing opens up a new world of use cases for deep learning across numerous markets, including manufacturing, transportation,…

“Efficient Neuromorphic Computing with Dynamic Vision Sensor, Spiking Neural Network Accelerator and Hardware-aware Algorithms,” a Presentation from Arizona State University

Jae-sun Seo, Associate Professor at Arizona State University, presents the “Efficient Neuromorphic Computing with Dynamic Vision Sensor, Spiking Neural Network Accelerator and Hardware-aware Algorithms” tutorial at the May 2023 Embedded Vision Summit. Spiking neural networks (SNNs) mimic biological nervous systems. Using event-driven computation and communication, SNNs achieve very low power…

BrainChip Engages VVDN to Deliver Industry’s First Commercial Edge Box Based on Neuromorphic Technology

Laguna Hills, Calif. – September 20, 2023 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI IP, today announced that it has partnered with VVDN Technologies, a premier electronics engineering and manufacturing company, to deliver the industry’s first Edge box based

BrainChip to Participate in the Virtual Tech Conference Series: Emerging Growth in AI, Presented by Maxim Group LLC

Laguna Hills, Calif. – September 20, 2023 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI IP, has been invited to present at the conference on Emerging Growth in AI, presented by Maxim Group LLC, Tuesday, September 26 and Wednesday, September

Inuitive Adopts VeriSilicon’s Advanced ISP IP for its Vision AI Processor

VeriSilicon’s IP solutions optimized for demanding Robotics and AR/VR/MR applications. Shanghai, China, September 21, 2023 – VeriSilicon (688521.SH) today announced that Inuitive, a leading vision-on-chip processor company, has adopted its dual-channel Image Signal Processor (ISP) IP, featuring low latency and low power capabilities, into its mass-produced NU4100 vision AI processor. This integration brings outstanding imaging and vision